Aim

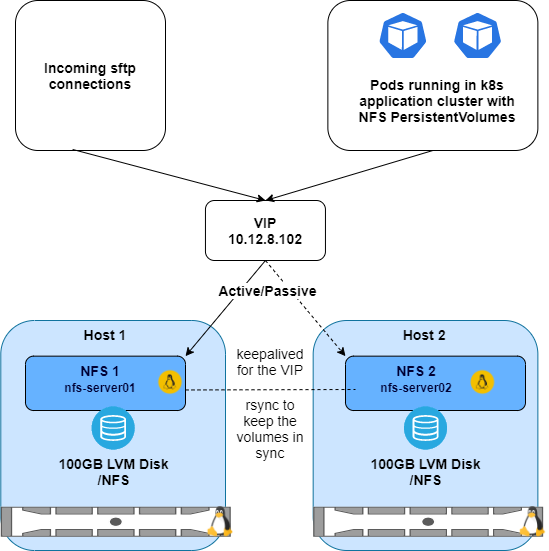

Create a simple NFS HA cluster on RHEL 7 VMs with local storage, as shown in the diagram above. The VMs run as guests on a RHEL 8 server running KVM. Clients on the local network will connect to the share, and pods running in a Kubernetes cluster will mount it as PersistentVolumes.

I will also create an SFTP chroot jail for incoming client SFTP connections.

Prerequisites

Install the nfs-utils, keepalived and rsync packages and enable the nfs-server and keepalived services:

sudo yum install -y nfs-utils keepalived rsync

sudo systemctl enable nfs-server

sudo systemctl enable keepalived

keepalived --version

Get IP info

[istacey@nfs-server01 ~]$ ip --brief a s

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 10.12.6.111/25 fe80::5054:ff:fe79:79b3/64

eth1 UP 192.168.112.111/24 fe80::5054:ff:fe06:8dc5/64

eth2 UP 10.12.8.103/28 fe80::5054:ff:fec6:428f/64

[istacey@nfs-server02 ~]$ ip --brief a s

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 10.12.6.112/25 fe80::5054:ff:fef5:765e/64

eth1 UP 192.168.112.112/24 fe80::5054:ff:fead:fa64/64

eth2 UP 10.12.8.104/28 fe80::5054:ff:fef5:13de/64
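Once keepalived is running, the same ip --brief a s output shows which node holds the VIP, because keepalived adds it to eth2 as an extra /32 address. A minimal POSIX sh sketch (vip_holder is a hypothetical helper name, not part of any package) that reads that output on stdin and prints the interface carrying a given address:

```shell
#!/bin/sh
# Read `ip --brief address show` output on stdin and print the interface
# that carries address $1. Exit status is 0 if found, 1 otherwise.
vip_holder() {
    awk -v vip="$1" '
        {
            # Column 1 is the interface, column 2 the state; every later
            # column is an address in CIDR form, e.g. 10.12.8.102/32.
            for (i = 3; i <= NF; i++) {
                split($i, a, "/")
                if (a[1] == vip) { print $1; found = 1 }
            }
        }
        END { exit found ? 0 : 1 }
    '
}
```

Usage: ip --brief a s | vip_holder 10.12.8.102 should print eth2 on the current MASTER and exit non-zero on the BACKUP, which makes it easy to check both nodes during a failover test.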

VIP DETAILS:

VIP – NFS nfsvip 10.12.8.102

NFS_01 nfs-server01 10.12.8.103

NFS_02 nfs-server02 10.12.8.104
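In this setup the VIP should be a free address in the same subnet as the eth2 addresses keepalived runs on. 10.12.8.103/28 and 10.12.8.104/28 both sit in 10.12.8.96/28, whose usable hosts are .97 to .110, so 10.12.8.102 qualifies. A minimal POSIX sh sketch of that check using integer masking (ip_to_int and in_subnet are hypothetical names; IPv4 only, and octets must not carry leading zeros, which shell arithmetic would read as octal):

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
# The body runs in a subshell so the IFS change does not leak.
ip_to_int() (
    IFS=.
    set -- $1          # split "a.b.c.d" into $1..$4
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if address $1 lies in network $2 (CIDR, e.g. 10.12.8.103/28).
in_subnet() (
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    if [ "$prefix" -eq 0 ]; then
        mask=0
    else
        # Keep only the top $prefix bits of a 32-bit value.
        mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    fi
    [ $(( ip & mask )) -eq $(( net & mask )) ]
)
```

Usage: in_subnet 10.12.8.102 10.12.8.103/28 && echo "VIP is on the eth2 subnet". Passing the interface address with its prefix works because both sides are masked before comparison.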

Configure keepalived

Server 1

[istacey@nfs-server01 ~]$ cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

vrrp_instance VI_1 {

state MASTER

interface eth2

virtual_router_id 51

priority 255

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.12.8.102

}

}
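Server 2's file, shown next, is identical apart from state BACKUP and priority 254. One way to keep the two files in step is to generate both from a single template. A minimal POSIX sh sketch (keepalived_conf is a hypothetical helper) that reproduces the configuration used here:

```shell
#!/bin/sh
# Print the keepalived.conf used in this setup. The two nodes share
# everything except the VRRP state ($1) and the priority ($2).
keepalived_conf() (
    state=$1
    priority=$2
    cat <<EOF
! Configuration File for keepalived
vrrp_instance VI_1 {
    state ${state}
    interface eth2
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.12.8.102
    }
}
EOF
)
```

Usage: keepalived_conf MASTER 255 | sudo tee /etc/keepalived/keepalived.conf on nfs-server01, and keepalived_conf BACKUP 254 | sudo tee /etc/keepalived/keepalived.conf on nfs-server02. As written, this configuration moves the VIP when the MASTER stops sending VRRP advertisements (for example when the node or its keepalived process goes down). It does not check whether nfs-server itself is running; keepalived's vrrp_script and track_script options exist for that and are not covered here.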

Server 2:

[istacey@nfs-server02 ~]$ cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

vrrp_instance VI_1 {

state BACKUP

interface eth2

virtual_router_id 51

priority 254

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.12.8.102

}

}

Test with ping:

[istacey@nfs-server02 ~]$ ping 10.12.8.102

PING 10.12.8.102 (10.12.8.102) 56(84) bytes of data.

From 10.12.8.104 icmp_seq=1 Destination Host Unreachable

From 10.12.8.104 icmp_seq=2 Destination Host Unreachable

From 10.12.8.104 icmp_seq=3 Destination Host Unreachable

From 10.12.8.104 icmp_seq=4 Destination Host Unreachable

^C

--- 10.12.8.102 ping statistics ---

4 packets transmitted, 0 received, +4 errors, 100% packet loss, time 2999ms

pipe 4

[istacey@nfs-server02 ~]$

[istacey@nfs-server01 ~]$ sudo systemctl start keepalived

[istacey@nfs-server02 ~]$ sudo systemctl start keepalived

[istacey@nfs-server02 ~]$ ping 10.12.8.102

PING 10.12.8.102 (10.12.8.102) 56(84) bytes of data.

64 bytes from 10.12.8.102: icmp_seq=1 ttl=64 time=0.123 ms

64 bytes from 10.12.8.102: icmp_seq=2 ttl=64 time=0.116 ms

64 bytes from 10.12.8.102: icmp_seq=3 ttl=64 time=0.104 ms

Show VIP:

[istacey@nfs-server01 ~]$ ip --brief a s

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 10.12.6.111/25 fe80::5054:ff:fe79:79b3/64

eth1 UP 192.168.112.111/24 fe80::5054:ff:fe06:8dc5/64

eth2 UP 10.12.8.103/28 10.12.8.102/32 fe80::5054:ff:fec6:428f/64

[istacey@nfs-server01 ~]$

Create SFTP chroot jail

Create the nfsrsync, vmnfs1 and sftpusers groups, the nfsrsync and vmnfs1 users, and the chroot directory tree on both servers:

[istacey@nfs-server01 ~]$ sudo groupadd -g 15000 nfsrsync

[istacey@nfs-server01 ~]$ sudo groupadd -g 15001 vmnfs1

[istacey@nfs-server01 ~]$ sudo groupadd -g 15002 sftpusers

[istacey@nfs-server01 ~]$ sudo useradd -u 15000 -g nfsrsync nfsrsync

[istacey@nfs-server01 ~]$ sudo useradd -u 15001 -g vmnfs1 vmnfs1

[istacey@nfs-server01 ~]$ sudo usermod -aG sftpusers,nfsrsync vmnfs1

[istacey@nfs-server01 ~]$ sudo mkdir /NFS/vmnfs1

[istacey@nfs-server01 ~]$ sudo mkdir /NFS/vmnfs1/home

[istacey@nfs-server01 ~]$ sudo mkdir /NFS/vmnfs1/home/voucher-management

[istacey@nfs-server01 ~]$ sudo chown vmnfs1:sftpusers /NFS/vmnfs1/home

Note: change ownership only for the user's chrooted “home” directory. Everything above it (/, /NFS and /NFS/vmnfs1) must keep the default root ownership and permissions, because sshd checks them before it allows the chroot.
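The sshd_config man page states the rule behind this: every component of the ChrootDirectory path must be a root-owned directory that no other user or group can write to. A minimal POSIX sh sketch (check_chroot_path is a hypothetical name; it assumes stat -c as provided by GNU coreutils on RHEL) that walks a path from / downwards and reports each component breaking that rule:

```shell
#!/bin/sh
# Check every component of path $1 against sshd's ChrootDirectory rule:
# owned by uid 0 and not writable by group or others.
# Prints offending components; exit status 1 if any were found.
check_chroot_path() (
    rc=0
    dir=/
    rest=${1#/}
    while :; do
        if [ ! -d "$dir" ]; then
            echo "not a directory: $dir"; rc=1
        else
            owner=$(stat -c %u "$dir")
            mode=$(stat -c %a "$dir")
            # 022 (octal) = group-write plus other-write bits.
            if [ "$owner" -ne 0 ] || [ $(( 0$mode & 022 )) -ne 0 ]; then
                echo "bad ownership or mode (uid $owner, mode $mode): $dir"; rc=1
            fi
        fi
        [ -z "$rest" ] && break
        # Peel off the next path component.
        part=${rest%%/*}
        case $rest in */*) rest=${rest#*/} ;; *) rest= ;; esac
        [ -n "$part" ] && dir=${dir%/}/$part
    done
    exit "$rc"
)
```

Usage: on a correctly prepared server, check_chroot_path /NFS/vmnfs1 should print nothing and exit 0. The user-owned /NFS/vmnfs1/home is deliberately not checked, since it lives inside the chroot.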

[istacey@nfs-server01 ~]$ find /NFS -type d -exec ls -ld {} \;

drwxr-xr-x. 3 root root 20 Jul 20 15:52 /NFS

drwxr-xr-x 3 root root 18 Jul 20 15:00 /NFS/vmnfs1

drwxr-xr-x 3 vmnfs1 sftpusers 52 Jul 20 15:16 /NFS/vmnfs1/home

drwxrwxrwx 2 vmnfs1 nfsrsync 59 Jul 20 15:31 /NFS/vmnfs1/home/voucher-management

Update the sshd configuration and restart the service:

[istacey@nfs-server01 ~]$ sudo vi /etc/ssh/sshd_config

[istacey@nfs-server01 ~]$ sudo cat /etc/ssh/sshd_config | grep Subsys -A3

#Subsystem sftp /usr/libexec/openssh/sftp-server

Subsystem sftp internal-sftp -d /home

Match Group sftpusers

ChrootDirectory /NFS/%u

ForceCommand internal-sftp -d /home/voucher-management

[istacey@nfs-server01 ~]$ sudo systemctl restart sshd

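Note: in ForceCommand, the -d option of internal-sftp sets the starting directory, so the sftp user lands in /home/voucher-management inside the chroot rather than at its top level.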

To test, first check plain ssh. This should fail:

[istacey@nfs-server02 ~]$ ssh vmnfs1@nfs-server01

vmnfs1@nfs-server01's password:

Last login: Tue Jul 20 15:13:33 2021 from nfs-server02-om.ocs.a1.hr

/bin/bash: No such file or directory

Connection to nfs-server01 closed.

[istacey@nfs-server02 ~]$

OR:

[istacey@nfs-server02 ~]$ ssh vmnfs1@nfs-server01

vmnfs1@nfs-server01's password:

This service allows sftp connections only.

Connection to nfs-server01 closed.

[istacey@nfs-server02 ~]$

The user can no longer connect via ssh. Let’s try sftp:

[istacey@nfs-server02 ~]$ sftp vmnfs1@nfs-server01

vmnfs1@nfs-server01's password:

Connected to nfs-server01.

sftp> pwd

Remote working directory: /home/voucher-management

sftp> ls

testfile testfile1 testfiledate

sftp> quit

[istacey@nfs-server02 ~]$

As required, the user lands in /home/voucher-management (/NFS/vmnfs1/home/voucher-management/ on the server).

Finally, make sure a regular user can still log in via ssh without the chroot restrictions. That completes this part: the SFTP server is configured with a chroot-jailed user.

Configure rsync

Because we are using local storage rather than shared storage, we synchronize the folders between the servers with rsync.
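The cron jobs later in this section push with rsync -rt every five minutes from each side. A minimal sketch of a wrapper they could call instead, under two assumptions that differ from the original jobs: flock -n (from util-linux) skips a run while the previous one still holds the lock, and rsync -u (--update) skips files that are newer on the receiving side, so one server's push does not overwrite a fresher copy on the peer. The function name sync_to_peer, the default lock path and the RSYNC override are hypothetical. Like the original jobs, it does not propagate deletions.

```shell
#!/bin/sh
# Push directory $1 to destination $2 with rsync, unless a previous run
# still holds lock file $3. Exit status 1 from flock means "skipped".
# RSYNC may override the rsync binary (e.g. RSYNC=echo for a dry test).
sync_to_peer() {
    # -r/-t as in the original cron job; -u additionally skips files
    # that are newer on the receiver, which matters for two-way pushes.
    flock -n "${3:-/tmp/sync_to_peer.lock}" "${RSYNC:-rsync}" -rtu "$1" "$2"
}
```

The equivalent one-line crontab entry on nfs-server01 would be */5 * * * * flock -n /tmp/voucher-sync.lock rsync -rtu /NFS/vmnfs1/home/voucher-management/ nfsrsync@nfs-server02:/NFS/vmnfs1/home/voucher-management/ (with the hosts swapped on nfs-server02).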

On both servers I created a user account called nfsrsync, verified folder ownership and permissions, and generated and copied SSH keys:

[nfsrsync@nfs-server01 ~]$ ssh-keygen -t rsa

[nfsrsync@nfs-server01 .ssh]$ cp id_rsa.pub authorized_keys

[nfsrsync@nfs-server01 ~]$ ssh-copy-id nfs-server02

[nfsrsync@nfs-server01 .ssh]$ scp id_rsa* nfs-server02:~/.ssh/

Add a cron job on each server so that rsync pushes changes in both directions. I chose not to run rsync as a daemon for this solution.

[nfsrsync@nfs-server01 ~]$ crontab -l

*/5 * * * * rsync -rt /NFS/vmnfs1/home/voucher-management/ nfsrsync@nfs-server02:/NFS/vmnfs1/home/voucher-management/

[nfsrsync@nfs-server02 ~]$ crontab -l

*/5 * * * * rsync -rt /NFS/vmnfs1/home/voucher-management/ nfsrsync@nfs-server01:/NFS/vmnfs1/home/voucher-management/

[nfsrsync@nfs-server02 ~]$

Configure NFS

On both servers:

[istacey@nfs-server01 ~]$ sudo vi /etc/exports

[istacey@nfs-server01 ~]$ cat /etc/exports

/NFS/vmnfs1/home/voucher-management *(rw,no_root_squash)

[istacey@nfs-server01 ~]$ sudo systemctl start nfs-server

Verify with showmount and test mounting the share from server 2:

[istacey@nfs-server02 ~]$ sudo mount nfs-server01:/NFS/vmnfs1/home/voucher-management /mnt

[istacey@nfs-server02 ~]$ df -h /mnt

Filesystem Size Used Avail Use% Mounted on

nfs-server01:/NFS/vmnfs1/home/voucher-management 100G 33M 100G 1% /mnt

[istacey@nfs-server02 ~]$ mount | grep nfs4

nfs-server01:/NFS/vmnfs1/home/voucher-management on /mnt type nfs4 (rw,relatime,vers=4.1,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=10.12.6.112,local_lock=none,addr=10.12.6.111)

[istacey@nfs-server02 ~]$

[istacey@nfs-server02 ~]$ find /mnt

/mnt

/mnt/testfile

/mnt/testfile1

/mnt/testfiledate

[istacey@nfs-server02 ~]$

And we are done.
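The aim mentions Kubernetes pods mounting this share as PersistentVolumes, which the walkthrough does not show. A minimal sketch under these assumptions: the PV points at the VIP 10.12.8.102 rather than at nfs-server01 (the mount test above used the node name directly), and the name, size, ReadWriteMany access mode and Retain policy are placeholders to adapt. pv_manifest is a hypothetical helper that only prints the manifest:

```shell
#!/bin/sh
# Print a Kubernetes PersistentVolume for the voucher-management export.
# $1 = PV name, $2 = capacity; both default to placeholder values.
pv_manifest() (
    name=${1:-voucher-management-nfs}
    size=${2:-10Gi}
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 10.12.8.102    # keepalived VIP, not a single node
    path: /NFS/vmnfs1/home/voucher-management
EOF
)
```

Usage: pv_manifest | kubectl apply -f - creates the volume. A PersistentVolumeClaim with a matching access mode, a request no larger than the capacity and the same (here empty) storageClassName can then bind to it. Because each node exports its own local copy of the data, clients may still see stale file handles after a VIP failover; that is a property of this local-storage design rather than of the manifest.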

References

Keepalived: https://www.redhat.com/sysadmin/keepalived-basics

rsync: https://www.atlantic.net/vps-hosting/how-to-use-rsync-copy-sync-files-servers/

chroot jail: https://access.redhat.com/solutions/2399571

ForceCommand: https://serverfault.com/questions/704869/forward-sftp-user-to-chroot-subdirectory-after-authentication