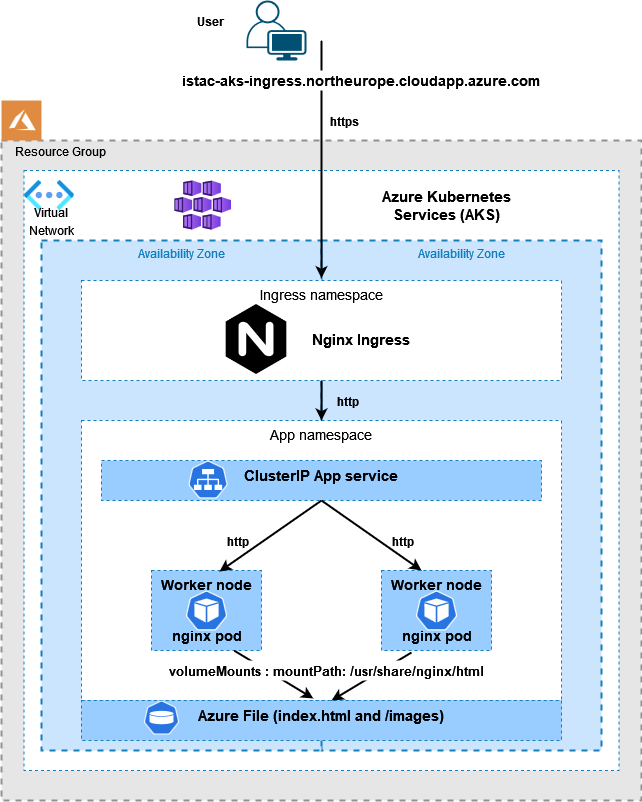

Aim:

Publish a website in a new AKS (Azure Kubernetes Service) cluster, initially following the tutorial at https://docs.microsoft.com/en-us/azure/aks/tutorial-kubernetes-prepare-acr?tabs=azure-cli

Create the Azure Resources:

This tutorial requires that you’re running the Azure CLI version 2.0.53 or later. Run az --version to find the version.
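The version requirement above can be checked in a script rather than by eye. The following is a minimal sketch, not part of the original tutorial: the helper name version_ge is hypothetical, it relies on GNU sort's -V option, and it assumes the installed CLI is recent enough to provide the az version command (older releases only offer az --version).

```shell
# Hypothetical helper: succeeds when version $1 is >= version $2.
# Relies on GNU sort's -V (version sort) option.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

required="2.0.53"

# Only query the CLI when it is actually installed.
# Assumption: `az version` exists on this CLI release.
if command -v az >/dev/null 2>&1; then
  installed="$(az version --query '"azure-cli"' --output tsv)"
  if version_ge "$installed" "$required"; then
    echo "Azure CLI $installed is new enough"
  else
    echo "Azure CLI $installed is older than $required" >&2
  fi
fi
```

A plain string comparison would get versions such as 2.0.9 and 2.0.53 the wrong way round, which is why the sketch uses version sort.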

Use ‘az login’ to log into the Azure account:

az login

The CLI opens your default browser and loads an Azure sign-in page. I have two-factor authentication enabled and need my phone to confirm access.

Create a new Resource Group:

az group create --name AKS4BIM02 --location northeurope

Create an Azure Container Registry:

I will not use this initially, but I will create it for future use.

az acr create --resource-group AKS4BIM02 --name acr4BIM --sku Basic

## List images in registry (None yet)

az acr repository list --name acr4BIM --output table

Create the AKS cluster:

See options described at the following links:

- https://docs.microsoft.com/en-us/azure/aks/tutorial-kubernetes-deploy-cluster?tabs=azure-cli

- https://docs.microsoft.com/en-us/azure/aks/availability-zones

istacey@DUB004043:~$ az aks create \

> --resource-group AKS4BIM02 \

> --name AKS4BIM02 \

> --node-count 2 \

> --generate-ssh-keys \

> --zones 1 2

Connect to the cluster:

If necessary, install the Kubernetes CLI (az aks install-cli) and then connect:

$ az aks get-credentials --resource-group AKS4BIM02 --name AKS4BIM02

Merged "AKS4BIM02" as current context in /home/istacey/.kube/config

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

aks-nodepool1-88432225-vmss000000 Ready agent 7m22s v1.20.9 10.240.0.4 <none> Ubuntu 18.04.6 LTS 5.4.0-1059-azure containerd://1.4.9+azure

aks-nodepool1-88432225-vmss000001 Ready agent 7m17s v1.20.9 10.240.0.5 <none> Ubuntu 18.04.6 LTS 5.4.0-1059-azure containerd://1.4.9+azure

Create Storage Account and Azure File share

Following https://docs.microsoft.com/en-us/azure/aks/azure-files-volume

#Set variables:

AKS_PERS_STORAGE_ACCOUNT_NAME=istacestorageacct01

AKS_PERS_RESOURCE_GROUP=AKS4BIM02

AKS_PERS_LOCATION=northeurope

AKS_PERS_SHARE_NAME=aksshare4bim02

az storage account create -n $AKS_PERS_STORAGE_ACCOUNT_NAME -g $AKS_PERS_RESOURCE_GROUP -l $AKS_PERS_LOCATION --sku Standard_LRS

export AZURE_STORAGE_CONNECTION_STRING=$(az storage account show-connection-string -n $AKS_PERS_STORAGE_ACCOUNT_NAME -g $AKS_PERS_RESOURCE_GROUP -o tsv)

echo $AZURE_STORAGE_CONNECTION_STRING

az storage share create -n $AKS_PERS_SHARE_NAME --connection-string $AZURE_STORAGE_CONNECTION_STRING

STORAGE_KEY=$(az storage account keys list --resource-group $AKS_PERS_RESOURCE_GROUP --account-name $AKS_PERS_STORAGE_ACCOUNT_NAME --query "[0].value" -o tsv)

echo Storage account name: $AKS_PERS_STORAGE_ACCOUNT_NAME

echo $STORAGE_KEY
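Storage account names must be 3 to 24 characters long and may contain only lowercase letters and digits, so it can be worth validating the value of AKS_PERS_STORAGE_ACCOUNT_NAME before calling az storage account create. A minimal sketch follows; the function name valid_storage_account_name is hypothetical, and the check covers only the naming rule, not global uniqueness.

```shell
# Hypothetical helper: succeeds when $1 is 3-24 characters of
# lowercase letters and digits only.
valid_storage_account_name() {
  # LC_ALL=C keeps [a-z] from matching uppercase letters in some locales.
  printf '%s' "$1" | LC_ALL=C grep -Eq '^[a-z0-9]{3,24}$'
}

name="istacestorageacct01"
if valid_storage_account_name "$name"; then
  echo "storage account name '$name' looks valid"
else
  echo "storage account name '$name' is not valid" >&2
fi
```

A name such as AKS4BIM02 would fail this check because of the uppercase letters, even though it is fine as a resource group name.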

Create a Kubernetes secret:

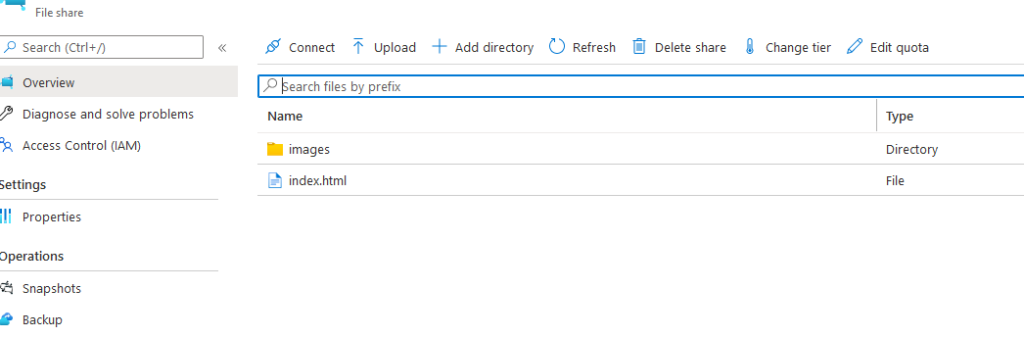

kubectl create secret generic azure-secret --from-literal=azurestorageaccountname=$AKS_PERS_STORAGE_ACCOUNT_NAME --from-literal=azurestorageaccountkey=$STORAGE_KEY

Upload index.html and images folder to file share via Azure Portal

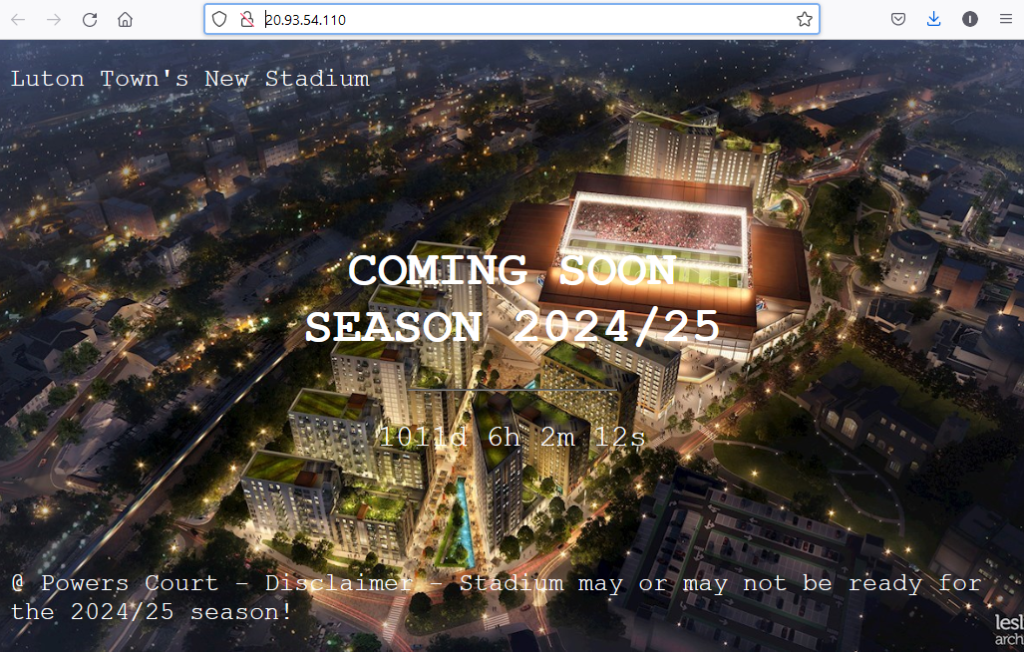

index.html based on https://www.w3schools.com/howto/howto_css_coming_soon.asp and related to one of my favorite subjects Luton Town FC! 🙂

Create the Kubernetes Deployment

Create new namespace:

kubectl create ns isnginx

Create a new deployment YAML manifest file:

$ vi nginx-deployment.yaml

$ cat nginx-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: isnginx-deployment
  namespace: isnginx
  labels:
    app: isnginx-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: isnginx-deployment
  template:
    metadata:
      labels:
        app: isnginx-deployment
    spec:
      containers:
      - name: isnginx-deployment
        image: nginx
        volumeMounts:
        - name: nginxtest01
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nginxtest01
        azureFile:
          secretName: azure-secret
          shareName: aksshare4bim02
          readOnly: false

Create the deployment:

$ kubectl create -f nginx-deployment.yaml

deployment.apps/isnginx-deployment created

$ kubectl rollout status deployment isnginx-deployment -n isnginx

Waiting for deployment "isnginx-deployment" rollout to finish: 0 of 2 updated replicas are available...

$ kubectl -n isnginx describe pod isnginx-deployment-5ff78ff678-7dphq | tail -5

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m32s default-scheduler Successfully assigned isnginx/isnginx-deployment-5ff78ff678-7dphq to aks-nodepool1-88432225-vmss000000

Warning FailedMount 29s kubelet Unable to attach or mount volumes: unmounted volumes=[nginxtest01], unattached volumes=[default-token-lh6xf nginxtest01]: timed out waiting for the condition

Warning FailedMount 24s (x9 over 2m32s) kubelet MountVolume.SetUp failed for volume "aksshare4bim02" : Couldn't get secret isnginx/azure-secret

The mount fails because the secret was created in the default namespace.
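Secrets are namespaced, so a pod can only mount a Secret that lives in its own namespace. A check along the following lines could be run before applying the manifest to catch this earlier. It is only a sketch: the helper names secret_exists and check_mount_secret are hypothetical, and the functions simply wrap kubectl get secret.

```shell
# Hypothetical helper: succeeds when Secret $2 exists in namespace $1.
secret_exists() {
  kubectl --namespace "$1" get secret "$2" >/dev/null 2>&1
}

# Hypothetical wrapper: report whether the Secret is where the pod needs it.
check_mount_secret() {
  if secret_exists "$1" "$2"; then
    echo "secret $2 found in namespace $1"
  else
    echo "secret $2 missing in namespace $1"
    return 1
  fi
}

# Usage (requires cluster access):
#   check_mount_secret isnginx azure-secret
```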

Create a secret in the isnginx namespace:

kubectl -n isnginx create secret generic azure-secret --from-literal=azurestorageaccountname=$AKS_PERS_STORAGE_ACCOUNT_NAME --from-literal=azurestorageaccountkey=$STORAGE_KEY

$ kubectl -n isnginx get secret

NAME TYPE DATA AGE

azure-secret Opaque 2 97m

default-token-lh6xf kubernetes.io/service-account-token 3 104m

Remove the deployment and recreate:

$ kubectl delete -f nginx-deployment.yaml

deployment.apps "isnginx-deployment" deleted

$ kubectl create -f nginx-deployment.yaml

deployment.apps/isnginx-deployment created

$ kubectl rollout status deployment isnginx-deployment -n isnginx

Waiting for deployment "isnginx-deployment" rollout to finish: 0 of 2 updated replicas are available...

Waiting for deployment "isnginx-deployment" rollout to finish: 1 of 2 updated replicas are available...

deployment "isnginx-deployment" successfully rolled out

$ kubectl -n isnginx get all

NAME READY STATUS RESTARTS AGE

pod/isnginx-deployment-6b8d9db99c-2kj5l 1/1 Running 0 80s

pod/isnginx-deployment-6b8d9db99c-w65gp 1/1 Running 0 80s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/isnginx-deployment 2/2 2 2 80s

NAME DESIRED CURRENT READY AGE

replicaset.apps/isnginx-deployment-6b8d9db99c 2 2 2 80s

Create service / Expose deployment

And get external IP:

$ kubectl -n isnginx expose deployment isnginx-deployment --port=80 --type=LoadBalancer

service/isnginx-deployment exposed

$ kubectl -n isnginx get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

isnginx-deployment LoadBalancer 10.0.110.58 20.93.54.110 80:30451/TCP 95m

Test with curl and web browser:

$ curl http://20.93.54.110 | grep -i luton

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 2139 100 2139 0 0 44562 0 --:--:-- --:--:-- --:--:-- 45510

<p>Luton Town's New Stadium</p>

Create HTTPS ingress:

Aim: create an HTTPS ingress with a signed certificate and redirect HTTP requests to HTTPS, following https://docs.microsoft.com/en-us/azure/aks/ingress-tls

Create Ingress controller:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.0.4/deploy/static/provider/cloud/deploy.yaml

$ kubectl -n ingress-nginx get all

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-twnd7 0/1 Completed 0 8m3s

pod/ingress-nginx-admission-patch-vnsj4 0/1 Completed 1 8m3s

pod/ingress-nginx-controller-5d4b6f79c4-mknxc 1/1 Running 0 8m4s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller LoadBalancer 10.0.77.11 20.105.96.112 80:31078/TCP,443:31889/TCP 8m4s

service/ingress-nginx-controller-admission ClusterIP 10.0.2.37 <none> 443/TCP 8m4s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 1/1 1 1 8m4s

NAME DESIRED CURRENT READY AGE

replicaset.apps/ingress-nginx-controller-5d4b6f79c4 1 1 1 8m4s

NAME COMPLETIONS DURATION AGE

job.batch/ingress-nginx-admission-create 1/1 1s 8m3s

job.batch/ingress-nginx-admission-patch 1/1 3s 8m3s

Add an A record to DNS zone

$ IP=20.105.96.112

$ DNSNAME="istac-aks-ingress"

$ PUBLICIPID=$(az network public-ip list --query "[?ipAddress!=null]|[?contains(ipAddress, '$IP')].[id]" --output tsv)

$ az network public-ip update --ids $PUBLICIPID --dns-name $DNSNAME

$ az network public-ip show --ids $PUBLICIPID --query "[dnsSettings.fqdn]" --output tsv

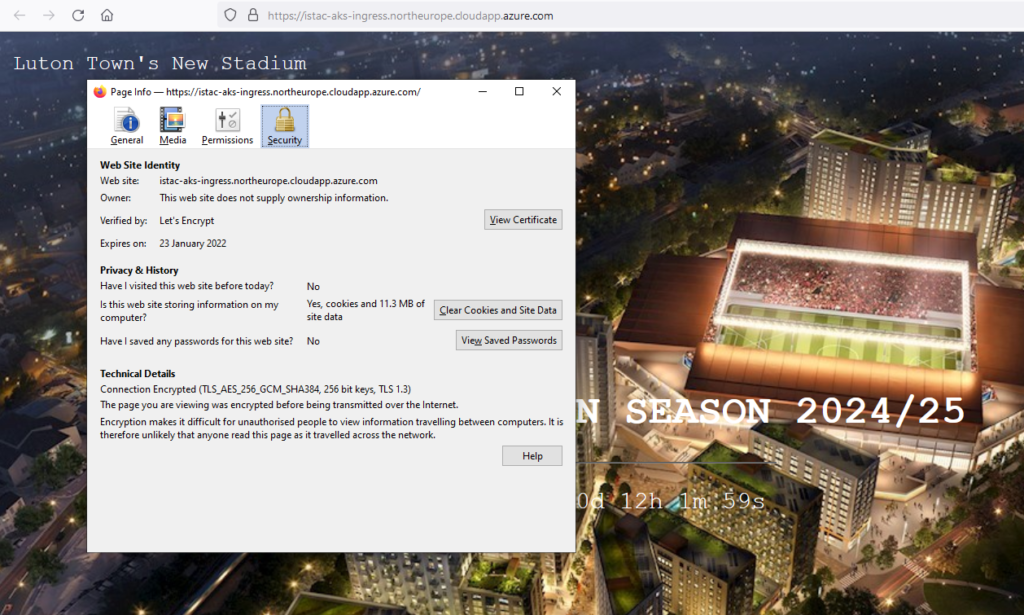

istac-aks-ingress.northeurope.cloudapp.azure.com

$ az network public-ip show --ids $PUBLICIPID --query "[dnsSettings.fqdn]" --output tsv | nslookup

Server: 89.101.160.4

Address: 89.101.160.4#53

Non-authoritative answer:

Name: istac-aks-ingress.northeurope.cloudapp.azure.com

Address: 20.105.96.112

Install cert-manager with helm:

$ kubectl label namespace ingress-nginx cert-manager.io/disable-validation=true

namespace/ingress-nginx labeled

$ helm repo add jetstack https://charts.jetstack.io

"jetstack" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "ingress-nginx" chart repository

...Successfully got an update from the "jetstack" chart repository

Update Complete. ⎈Happy Helming!⎈

$ helm install cert-manager jetstack/cert-manager \

> --namespace ingress-nginx \

> --set installCRDs=true \

> --set nodeSelector."kubernetes\.io/os"=linux

NAME: cert-manager

LAST DEPLOYED: Mon Oct 25 10:56:51 2021

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

cert-manager v1.5.4 has been deployed successfully!

$ kubectl -n ingress-nginx get all

NAME READY STATUS RESTARTS AGE

pod/cert-manager-88ddc7f8d-ltz9z 1/1 Running 0 158m

pod/cert-manager-cainjector-748dc889c5-kcdx7 1/1 Running 0 158m

pod/cert-manager-webhook-55dfcc5474-tbrz2 1/1 Running 0 158m

pod/ingress-nginx-admission-create-twnd7 0/1 Completed 0 3h45m

pod/ingress-nginx-admission-patch-vnsj4 0/1 Completed 1 3h45m

pod/ingress-nginx-controller-5d4b6f79c4-mknxc 1/1 Running 0 3h45m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cert-manager ClusterIP 10.0.7.50 <none> 9402/TCP 158m

service/cert-manager-webhook ClusterIP 10.0.132.151 <none> 443/TCP 158m

service/ingress-nginx-controller LoadBalancer 10.0.77.11 20.105.96.112 80:31078/TCP,443:31889/TCP 3h45m

service/ingress-nginx-controller-admission ClusterIP 10.0.2.37 <none> 443/TCP 3h45m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cert-manager 1/1 1 1 158m

deployment.apps/cert-manager-cainjector 1/1 1 1 158m

deployment.apps/cert-manager-webhook 1/1 1 1 158m

deployment.apps/ingress-nginx-controller 1/1 1 1 3h45m

NAME DESIRED CURRENT READY AGE

replicaset.apps/cert-manager-88ddc7f8d 1 1 1 158m

replicaset.apps/cert-manager-cainjector-748dc889c5 1 1 1 158m

replicaset.apps/cert-manager-webhook-55dfcc5474 1 1 1 158m

replicaset.apps/ingress-nginx-controller-5d4b6f79c4 1 1 1 3h45m

NAME COMPLETIONS DURATION AGE

job.batch/ingress-nginx-admission-create 1/1 1s 3h45m

job.batch/ingress-nginx-admission-patch 1/1 3s 3h45m

Create a CA cluster issuer:

$ vi cluster-issuer.yaml

$ cat cluster-issuer.yaml

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: ian@ianstacey.net
    privateKeySecretRef:
      name: letsencrypt
    solvers:
    - http01:
        ingress:
          class: nginx
          podTemplate:
            spec:
              nodeSelector:
                "kubernetes.io/os": linux

$ kubectl apply -f cluster-issuer.yaml

clusterissuer.cert-manager.io/letsencrypt created

Create an ingress route:

$ cat http7-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: powercourt-ingress
  namespace: isnginx
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /$2
    cert-manager.io/cluster-issuer: letsencrypt
spec:
  tls:
  - hosts:
    - istac-aks-ingress.northeurope.cloudapp.azure.com
    secretName: tls-secret
  defaultBackend:
    service:
      name: isnginx-clusterip
      port:
        number: 80
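The Ingress backend refers to a Service named isnginx-clusterip, which is not created in any of the steps shown here (the earlier expose command created a LoadBalancer Service called isnginx-deployment). One way such a Service could be defined is sketched below. This is an assumption, not the manifest actually used: the helper name clusterip_manifest is hypothetical, and the selector is taken from the pod label in nginx-deployment.yaml.

```shell
# Hypothetical helper: print a ClusterIP Service manifest for the nginx pods.
# The Service name matches the Ingress backend; the selector matches the
# pod label used in nginx-deployment.yaml.
clusterip_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: isnginx-clusterip
  namespace: isnginx
spec:
  type: ClusterIP
  selector:
    app: isnginx-deployment
  ports:
  - port: 80
    targetPort: 80
EOF
}

# Usage (requires cluster access):
#   clusterip_manifest | kubectl apply -f -
```

A ClusterIP Service is enough here because external traffic reaches the pods through the ingress controller's own LoadBalancer.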

$ kubectl apply -f http7-ingress.yaml

ingress.networking.k8s.io/powercourt-ingress configured

Check resources:

$ kubectl -n isnginx describe ingress powercourt-ingress

Name: powercourt-ingress

Namespace: isnginx

Address: 20.105.96.112

Default backend: isnginx-clusterip:80 (10.244.0.7:80,10.244.1.4:80)

TLS:

tls-secret terminates istac-aks-ingress.northeurope.cloudapp.azure.com

Rules:

Host Path Backends

---- ---- --------

* * isnginx-clusterip:80 (10.244.0.7:80,10.244.1.4:80)

Annotations: cert-manager.io/cluster-issuer: letsencrypt

kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/rewrite-target: /$2

nginx.ingress.kubernetes.io/use-regex: true

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 13m (x10 over 3h51m) nginx-ingress-controller Scheduled for sync

Normal UpdateCertificate 13m (x3 over 25m) cert-manager Successfully updated Certificate "tls-secret"

$ kubectl get certificate tls-secret --namespace isnginx

NAME READY SECRET AGE

tls-secret True tls-secret 144m

$ kubectl get certificate tls-secret --namespace isnginx -o yaml

apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  creationTimestamp: "2021-10-25T10:17:02Z"
  generation: 4
  name: tls-secret
  namespace: isnginx
  ownerReferences:
  - apiVersion: networking.k8s.io/v1
    blockOwnerDeletion: true
    controller: true
    kind: Ingress
    name: powercourt-ingress
    uid: e93a8939-1ed6-444b-b2db-b26aedf01dd8
  resourceVersion: "122626"
  uid: 79631ea4-541b-4251-9c7b-ad5294e6bbc0
spec:
  dnsNames:
  - istac-aks-ingress.northeurope.cloudapp.azure.com
  issuerRef:
    group: cert-manager.io
    kind: ClusterIssuer
    name: letsencrypt
  secretName: tls-secret
  usages:
  - digital signature
  - key encipherment
status:
  conditions:
  - lastTransitionTime: "2021-10-25T12:28:08Z"
    message: Certificate is up to date and has not expired
    observedGeneration: 4
    reason: Ready
    status: "True"
    type: Ready
  notAfter: "2022-01-23T11:28:06Z"
  notBefore: "2021-10-25T11:28:07Z"
  renewalTime: "2021-12-24T11:28:06Z"
  revision: 4

Test:

HTTP requests to http://istac-aks-ingress.northeurope.cloudapp.azure.com are successfully redirected to HTTPS:
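The redirect can also be confirmed from the command line by inspecting the response headers. The sketch below uses a hypothetical helper, is_https_redirect, that reads headers such as those printed by curl -sI and checks for a redirect status with an https Location. The ingress-nginx controller typically answers with a 308, but the helper accepts the other common redirect codes as well.

```shell
# Hypothetical helper: reads HTTP response headers on stdin (for example
# from `curl -sI`) and succeeds when the status line is a redirect
# (301, 302, 307 or 308) and the Location header points at an https URL.
is_https_redirect() {
  awk '
    NR == 1 { if ($2 ~ /^30[1278]$/) redirect = 1 }
    tolower($1) == "location:" && $2 ~ /^https:\/\// { https = 1 }
    END { exit !(redirect && https) }
  '
}

# Usage (requires network access):
#   curl -sI http://istac-aks-ingress.northeurope.cloudapp.azure.com \
#     | is_https_redirect && echo "redirects to HTTPS"
```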

The finished architecture: